1. Density and Point Probability

For a continuous distribution, such as the normal (Gaussian) distribution, the density at x measures the relative probability of getting a value close to x. The probability of getting a value within a particular interval is the area under the density curve over that interval.
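To see the difference between a density value and a probability, here is a small R sketch using the standard normal. The interval from -1 to 1 is only an illustrative choice.

```r
# Height of the standard normal density at x = 0: a relative likelihood, not a probability
density_at_0 <- dnorm(0)
density_at_0    # about 0.399, i.e. 1/sqrt(2*pi)

# Probability of landing in [-1, 1] = area under the density curve over that interval
area <- integrate(dnorm, lower = -1, upper = 1)$value
area            # about 0.683
```

The same area can also be obtained from the cumulative function as pnorm(1) - pnorm(-1), which is covered in the next section.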

As an example, take the familiar 'bell curve', otherwise known as the normal distribution. In the R console, we can plot the density of a standard normal distribution:

Fig. 1: Creating a Density Plot for a Normal Distribution

Fig. 2: Density Plot of a Standard Normal Distribution
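A minimal sketch of how such a density plot can be drawn in R. It is not necessarily the code in Fig. 1: the grid from -4 to 4 in steps of 0.1 and the axis labels are assumptions made for illustration.

```r
# Grid of x values spanning most of the standard normal's mass
x <- seq(-4, 4, by = 0.1)

# Draw the density as a continuous line
plot(x, dnorm(x), type = "l", xlab = "x", ylab = "density",
     main = "Standard normal density")
```

A one-line alternative is curve(dnorm(x), from = -4, to = 4), which evaluates the expression over the range for you.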

|

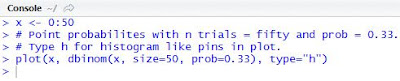

| Fig. 3: R Code for Plotting a Binomial Distribution |

Fig. 4: Plot of a Binomial Distribution, Histogram Type
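A sketch of a binomial point-probability plot in R, not necessarily matching Fig. 3. The values size = 50 and prob = 0.33 are assumptions chosen for illustration; type = "h" draws the histogram-like vertical bars.

```r
# Possible numbers of successes in 50 trials
x <- 0:50

# Point probabilities P(X = x), drawn as vertical bars
plot(x, dbinom(x, size = 50, prob = 0.33), type = "h",
     xlab = "number of successes", ylab = "point probability")

# A single point probability: exactly 16 successes out of 20 with p = 0.5
p16 <- dbinom(16, size = 20, prob = 0.5)
p16   # about 0.0046
```

Because these are genuine probabilities, the bars over all possible outcomes sum to 1.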

2. Cumulative Probability

A cumulative distribution function gives the probability of getting a value less than or equal to a specified x. I will not plot cumulative distributions here, because most of the time actual numbers are what we want. Suppose blood pressures follow a non-standard normal distribution with a mean of 132 and a standard deviation of 13, and we encounter a patient with a blood pressure of 160 (systolic, hopefully). Our x in this situation is 160, so what proportion of patients have a blood pressure of 160 or less?

Fig. 5: Cumulative Probability of a Normal Distribution with μ = 132 and sd = 13
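In R the cumulative probability of a normal distribution comes from pnorm. A minimal sketch of the blood-pressure calculation (the variable name p_le_160 is our own):

```r
# P(X <= 160) for blood pressures ~ Normal(mean = 132, sd = 13)
p_le_160 <- pnorm(160, mean = 132, sd = 13)
p_le_160        # about 0.984

# The upper tail, P(X > 160)
1 - p_le_160    # about 0.016

# Same answer after standardizing to a z score on the standard normal
pnorm((160 - 132) / 13)
```

So under this model roughly 1.6% of patients would have a pressure above 160.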

The same idea carries over to discrete distributions, where we use the pbinom function. Suppose 20 people choose between Coke (A) and Pepsi (B) in a blind test, and assume there is no real preference, so each person picks A with probability p = 0.5. In the test, 16 people chose option A, Coke. If there truly is no preference, how likely is a result at least this extreme, that is, 16 or more people choosing Coke?

Fig. 6: Cumulative Probability of a Binomial Distribution with n = 20 and prob = 0.5
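A sketch of the one-tailed calculation in R. Since pbinom(15, ...) gives P(X ≤ 15), subtracting it from 1 leaves P(X ≥ 16); the lower.tail = FALSE argument computes the same upper tail directly.

```r
# P(X >= 16) when X ~ Binomial(size = 20, prob = 0.5)
p_upper <- 1 - pbinom(15, size = 20, prob = 0.5)
p_upper    # about 0.0059

# Equivalent, asking pbinom for the upper tail P(X > 15) directly
pbinom(15, size = 20, prob = 0.5, lower.tail = FALSE)
```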

But if we had no idea beforehand which drink would be preferred, we also need to add the probability of equally extreme results in the other direction, that is, 4 or fewer people choosing Coke (A) over Pepsi (B).

Fig. 7: Cumulative Two-Tailed Probability of a Binomial Distribution, n = 20, prob = 0.5
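A sketch of the two-tailed calculation in R. The variable names are our own; binom.test, R's built-in exact binomial test, is shown only as a cross-check and should give the same two-sided p-value here because the distribution is symmetric when p = 0.5.

```r
# Upper tail: 16 or more choose Coke
upper <- 1 - pbinom(15, size = 20, prob = 0.5)

# Lower tail: 4 or fewer choose Coke, equally extreme in the other direction
lower <- pbinom(4, size = 20, prob = 0.5)

p_two_sided <- upper + lower
p_two_sided   # about 0.0118, twice the one-tailed value by symmetry

# Cross-check with the exact binomial test
binom.test(16, 20, p = 0.5)$p.value
```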

3. Quantiles

The quantile function is the inverse of the cumulative distribution function: the p-quantile is the value x such that the probability of getting a value less than or equal to x is p. The median, for example, is the 50% quantile, the value with half of the distribution below it. In the back of statistics books you will usually find tables of quantiles for a fixed set of probabilities, showing the boundary a test statistic must cross to be significant at that level. Common levels are 90%, 95%, and 99%, with 95% the most often used.
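A small R sketch of the inverse relationship, reusing the blood-pressure numbers from the previous section. qbinom, the binomial quantile function, is added here as the discrete analogue.

```r
# The median of the standard normal is its 50% quantile
qnorm(0.5)              # 0

# qnorm undoes pnorm: start from the blood-pressure example
p <- pnorm(160, mean = 132, sd = 13)
x_back <- qnorm(p, mean = 132, sd = 13)
x_back                  # 160, up to floating-point rounding

# Discrete analogue: the median of Binomial(size = 20, prob = 0.5)
qbinom(0.5, size = 20, prob = 0.5)   # 10
```

For a discrete distribution, qbinom returns the smallest x whose cumulative probability reaches p, so the round trip is only approximate there.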

Fig. 8: Formula for the 95% Confidence Interval of a Normal Distribution

Figure 8 gives the formula for a 95% confidence interval (CI). Given the key quantities of a normal sample, x̄ (the sample mean), σ (the standard deviation, assumed known), and n (the sample size), we can derive a 95% CI for μ, the true mean. Suppose x̄ = 83, σ = 12, and n = 5 (small, but fine for illustration). The standard error of the mean (sem), σ/√n, is the factor that scales the standard normal quantiles (Figure 10) in the formula.
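For reference, the usual known-σ 95% interval can be written as below. Here N_p stands for the p-quantile of the standard normal distribution (qnorm(p) in R); this notation is an assumption and may differ from the one in Figure 8.

$$
\bar{x} + \frac{\sigma}{\sqrt{n}} \times N_{0.025} \;\le\; \mu \;\le\; \bar{x} + \frac{\sigma}{\sqrt{n}} \times N_{0.975}
$$

With x̄ = 83, σ/√n = 12/√5 ≈ 5.367, N_0.025 ≈ -1.96, and N_0.975 ≈ 1.96:

$$
83 + 5.367 \times (-1.96) \approx 72.48 \;\le\; \mu \;\le\; 83 + 5.367 \times 1.96 \approx 93.52
$$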

Fig. 9: Code for Standard Error of the Mean and 95% CI

In the code in Figure 9, the sem (5.367) is calculated by dividing the standard deviation by the square root of the sample size. The sem is then multiplied by the 0.025 and 0.975 quantiles of the standard normal (leaving 2.5% in each tail), and the results are added to x̄ to get the lower and upper limits of the confidence interval for the true mean. For this sample, the 95% confidence interval runs from 72.48 to 93.52. This does not mean we are certain the true mean lies in that range; it means that intervals constructed this way cover the true mean in 95% of repeated samples.
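The calculation just described can be sketched in R as follows. The numbers come from the example; the variable names are our own choice and may not match Figure 9.

```r
xbar  <- 83   # sample mean
sigma <- 12   # known standard deviation
n     <- 5    # sample size

# Standard error of the mean
sem <- sigma / sqrt(n)
sem                                  # about 5.367

# Lower and upper 95% limits: 2.5% in each tail
lower <- xbar + sem * qnorm(0.025)   # about 72.48
upper <- xbar + sem * qnorm(0.975)   # about 93.52
c(lower, upper)
```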

Fig. 10: qnorm Functions and Quantile Values for Standard Normal Distribution

On a side note, qnorm(k) returns the k-quantile of the standard normal distribution: the value below which a proportion k of the distribution lies. The 2.5% and 97.5% quantiles appear often in statistics and are usually rounded to -1.96 and 1.96, respectively. Since 95% of the distribution lies between them, they give the 95% confidence interval.
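A quick R check of these two quantiles and the probability mass between them (the variable name q is our own):

```r
# 2.5% and 97.5% quantiles of the standard normal
q <- qnorm(c(0.025, 0.975))
q                          # about -1.96 and 1.96

# The probability mass between them is 95%
pnorm(q[2]) - pnorm(q[1])  # 0.95
```

The two values are mirror images because the standard normal is symmetric about 0.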

Here is some extra code for some fun:

Fig. 11: Z Values and 95% CI Calculation

Figure 11 works backwards from the 95% CI limits to their Z scores, which are the standard normal 2.5% and 97.5% quantiles, -1.96 and 1.96. The difference between each confidence limit and x̄ is multiplied by √n/σ, the reciprocal of the sem (σ/√n). Next, the two quantiles are combined into one vector with c(); multiplying that vector by the standard error of the mean and adding x̄ gives the lower and upper limits together.
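A sketch of both directions in R, under the same example values; it is an illustration of the idea rather than a copy of Figure 11.

```r
xbar <- 83; sigma <- 12; n <- 5
sem <- sigma / sqrt(n)

# Both 95% limits at once: combine the two quantiles with c()
ci <- xbar + qnorm(c(0.025, 0.975)) * sem
ci                               # about 72.48 and 93.52

# Work backwards: scale each limit's distance from xbar by sqrt(n)/sigma
z <- sqrt(n) / sigma * (ci - xbar)
z                                # about -1.96 and 1.96
```

Because R arithmetic is vectorized, one expression produces both limits without repeating the formula.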

4. Pseudo-random Numbers

Random numbers generated in R are not truly random: they are produced by a deterministic algorithm from a starting seed, which is why they are called pseudo-random. For practical purposes, however, they behave as random, so they can be used to simulate random samples.
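The role of the seed is easy to demonstrate with set.seed: resetting the same seed reproduces the same sequence. The seed value 123 is arbitrary.

```r
# Fix the seed, then draw five standard normal values
set.seed(123)
a <- rnorm(5)

# Resetting the same seed reproduces exactly the same "random" numbers
set.seed(123)
b <- rnorm(5)

identical(a, b)  # TRUE
```

This is what makes simulations reproducible: anyone running the same code with the same seed gets the same numbers.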

Fig. 12: Randomly Generated Sample Distributions

We can also adjust the arguments of the random-generation functions, for example setting the mean to 7 and the standard deviation to 5 for normal draws, or drawing 10 values from a binomial distribution with 20 trials (size = 20) and a success probability of 0.5.
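A sketch of these calls in R, not necessarily identical to Fig. 12. In rbinom, the first argument is the number of values to draw, size is the number of trials per value, and prob is the success probability; the seed is an arbitrary choice for reproducibility.

```r
set.seed(1)  # arbitrary seed, only for reproducibility

# 10 draws from a normal distribution with mean 7 and sd 5
norm_draws <- rnorm(10, mean = 7, sd = 5)

# 10 draws from a binomial distribution: 20 trials each, success probability 0.5
binom_draws <- rbinom(10, size = 20, prob = 0.5)

norm_draws
binom_draws
```

Each binomial draw is a count of successes between 0 and 20, so the values cluster around 10.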

There are many more types of distributions and statistical tests available in R, which build on the fundamental calculations on distributions in this post. We will explore these in later posts.

Thanks for reading,

Wayne
