Welcome back, readers,

Last time in the Text Mining Series we discussed how to retrieve tweets from the Twitter API with the twitteR package in R. Now that we have text data to work with, we can transform it from its raw format into a corpus, which is a collection of text documents.

This post continues from where we left off in Text Mining 1. Read Text Mining 3 here. We need two packages: tm for text mining and SnowballC for word stemming, which collapses different forms of a word into one.

Now is a good time to install and load them in R, so let us get started!
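If you have not installed them yet, here is a minimal setup sketch. It installs tm and SnowballC only when they are missing and then attaches both for the session.

```r
# Install tm (text mining) and SnowballC (stemming) only if they are missing
for (pkg in c("tm", "SnowballC")) {
  if (!requireNamespace(pkg, quietly = TRUE)) {
    install.packages(pkg)
  }
}

# Attach both packages for this session
library(tm)
library(SnowballC)
```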

Data.Frame to Corpus

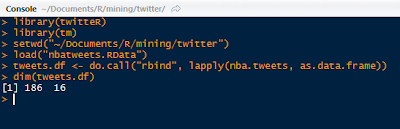

As we saw last time, the raw text in the tweet list object was far from polished and will need some formatting to clean. After loading the packages and setting the working directory, we start by converting the tweet list object into a data.frame:

[Figure: Tweet List Object into Data.Frame Object]

As we can see, the tweets data.frame has 186 rows and 16 columns. The descriptions of each column are shown below:

[Figure: Tweet Data.Frame Column Structure]

We have columns for the tweet text, whether the tweet has been favorited by the user (my account, since my app accessed it), how many favorites it received, the tweet ID, and so on. Note that at the bottom there are columns for longitude and latitude coordinates, so for geotagged tweets we can track the location where the tweet was sent.

Now that the data exists as a data.frame, we can convert it into a corpus using the Corpus() function from the tm package.

[Figure: Tweets into Corpus]

Observe that the corpus has 186 text documents: the conversion turned each tweet into its own text document.
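A minimal sketch of this conversion follows. It assumes a small hypothetical data.frame standing in for the real 186-row tweets data.frame (which the tweet list from part 1 would yield, for example via twitteR's twListToDF()), and it assumes the tweet text lives in a column named `text`. VectorSource() tells Corpus() to treat each element of the character vector as one document.

```r
library(tm)

# Hypothetical stand-in for the tweets data.frame from part 1;
# only the `text` column matters for building the corpus
tweets.df <- data.frame(
  text = c("Learning #rstats text mining! http://t.co/abc123",
           "RT @someone: 3 tips for cleaning tweets\nin R",
           "Corpus = a collection of text documents"),
  stringsAsFactors = FALSE
)

# VectorSource() makes each element of the character vector one document,
# so every tweet becomes its own text document in the corpus
tweet.corpus <- Corpus(VectorSource(tweets.df$text))

# One document per tweet (3 here, 186 for the real data)
length(tweet.corpus)
```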

Next, we will transform the tweet corpus. The getTransformations() function lists the text transformations that tm provides for use with tm_map(), such as removing punctuation, numbers, and words, and stripping extra white space.
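A short sketch of listing those transformations and passing two of them to tm_map() on a tiny hypothetical corpus. It uses VCorpus() rather than Corpus(), an assumption of this sketch, because recent versions of tm return a lighter SimpleCorpus from Corpus() and VCorpus() keeps tm_map() behaving the same across versions.

```r
library(tm)

# Names of tm's built-in transformations that can be passed to tm_map()
available <- getTransformations()
print(available)

# Apply two of them to a tiny hypothetical corpus
docs <- VCorpus(VectorSource(c("Tweet   with 2 numbers 42", "extra    spaces")))
docs <- tm_map(docs, removeNumbers)    # drop every digit
docs <- tm_map(docs, stripWhitespace)  # collapse runs of whitespace to one space

# Pull the transformed text back out as a character vector
cleaned <- vapply(seq_along(docs), function(i) content(docs[[i]]), character(1))
print(cleaned)
```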

Transforming Text

First we will perform the following modifications with the tm_map() function: converting characters to lowercase, removing punctuation, removing numbers, and removing URLs. These use, respectively, tolower, removePunctuation, removeNumbers, and a regular expression substitution with gsub().

[Figure: Removing Case, Punctuation, Numbers and URLs]
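A minimal sketch of the first three steps on two hypothetical tweets. One assumption worth flagging: in current versions of tm, a base R function such as tolower has to be wrapped in content_transformer() so that tm_map() keeps each document a PlainTextDocument instead of turning it into a bare character vector. The sketch also uses VCorpus() so it behaves the same across tm versions.

```r
library(tm)

# Hypothetical sample tweets standing in for the retrieved ones
docs <- VCorpus(VectorSource(c("Text Mining, Part 2: 186 tweets!",
                               "Cleaning #RStats data in 3 steps...")))

# tolower is base R, not a tm transformation, so wrap it in content_transformer()
docs <- tm_map(docs, content_transformer(tolower))
docs <- tm_map(docs, removePunctuation)  # strips , : ! # and periods
docs <- tm_map(docs, removeNumbers)      # strips the digits

# Pull the cleaned text back out as a character vector
cleaned <- vapply(seq_along(docs), function(i) content(docs[[i]]), character(1))
print(cleaned)
```

Removing numbers leaves runs of spaces behind; tm's stripWhitespace transformation can collapse them afterwards.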

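The gsub() function lets us replace text matching the regular expression for a URL, "http[[:alnum:]]*", with "", an empty string, which removes it. Because punctuation has already been removed by this point, a link such as http://t.co/... has collapsed into a single alphanumeric run, so the pattern matches the whole link. We then wrap this substitution in a function and pass it to tm_map().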
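The sketch below shows why the order matters and how the substitution plugs into tm_map(). The function name removeURL and the sample tweets are hypothetical; the pattern is the post's, with the POSIX class spelled [[:alnum:]].

```r
library(tm)

# Hypothetical helper: delete "http" plus any alphanumeric characters after it
removeURL <- function(x) gsub("http[[:alnum:]]*", "", x)

# On raw text the match stops at ":", so "://t.co/abc123" survives
removeURL("see http://t.co/abc123 now")

# After removePunctuation the link is one alphanumeric run and is removed whole
removeURL(removePunctuation("see http://t.co/abc123 now"))

# In the corpus: remove punctuation first, then apply the URL substitution
docs <- VCorpus(VectorSource("new post http://t.co/abc123 on text mining"))
docs <- tm_map(docs, removePunctuation)
docs <- tm_map(docs, content_transformer(removeURL))
content(docs[[1]])
```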

[Figure: Adding and Removing Stopwords]

Next we add stopwords and remove them from the corpus. Stopwords are common words that carry little meaning on their own, so dropping them leaves the content-bearing words of each tweet and makes the analysis more efficient. They include a, the, for, from, who, about, what, when, where, and more, depending on the analysis. A list of common stopwords, including some that Google Search filters out, can be found here.
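A minimal sketch of adding and removing stopwords, assuming tm's built-in English list as the starting point. The extra words "rt" and "via" and the decision to keep "not" are hypothetical examples, not the post's exact list. Note that removeWords() is case-sensitive, which is why it runs after the text has been lowercased.

```r
library(tm)

# Start from tm's English stopword list and add Twitter filler words
# ("rt" and "via" are example additions)
myStopwords <- c(stopwords("english"), "rt", "via")
# Keep words that matter for this (hypothetical) analysis
myStopwords <- setdiff(myStopwords, c("not"))

# Hypothetical, already lowercased tweet
docs <- VCorpus(VectorSource("rt this is not the end of the analysis via someone"))
docs <- tm_map(docs, removeWords, myStopwords)  # delete whole-word matches
docs <- tm_map(docs, stripWhitespace)           # tidy the gaps left behind
content(docs[[1]])
```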

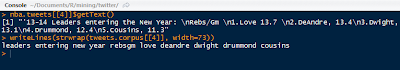

We can observe the effect of these transformations by looking at a single tweet, say tweet #4 below. Note how all the punctuation and numbers were removed, along with the newline escapes "\n", and that all characters are now lowercase.

[Figure: Tweet 4 Before and After]
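Here is a before-and-after sketch on one hypothetical tweet that runs the whole pipeline. A newline is whitespace rather than punctuation, so this sketch assumes tm's stripWhitespace transformation is used to turn it (and the gaps left by removed numbers and links) into single spaces.

```r
library(tm)

# Hypothetical raw tweet with a newline, standing in for tweet #4
raw <- "Text Mining 2:\nCleaning 186 Tweets! http://t.co/xyz789"
docs <- VCorpus(VectorSource(raw))

docs <- tm_map(docs, content_transformer(tolower))
docs <- tm_map(docs, removePunctuation)
docs <- tm_map(docs, removeNumbers)
docs <- tm_map(docs, content_transformer(function(x) gsub("http[[:alnum:]]*", "", x)))
# stripWhitespace collapses runs of spaces, tabs and newlines into one space
docs <- tm_map(docs, stripWhitespace)

after <- content(docs[[1]])
cat("Before:", raw, "\n")
cat("After: ", after, "\n")
```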

Next time we shall discuss stemming the documents in the corpus we created and transformed in this post. Stemming trims words down to their root (stem), so we can count a word's frequency even when it appears in different forms or tenses. For example, updated, updating, and update are all stemmed to updat.
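As a small preview, here is a sketch of stemming with SnowballC's wordStem() on the example words, plus tm's stemDocument() applied to a hypothetical one-document corpus.

```r
library(SnowballC)
library(tm)

# wordStem() reduces each word to its stem (Porter stemmer by default)
stems <- wordStem(c("updated", "updating", "update"))
print(stems)

# On a corpus, tm's stemDocument() stems every word of every document
docs <- VCorpus(VectorSource("updated updating update"))
docs <- tm_map(docs, stemDocument)
print(content(docs[[1]]))
```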

But that is for next time, so stay tuned!

Thanks for reading,

Wayne

@beyondvalence
