Hello Readers,

Welcome back, folks. Today we will revisit time series through the lens of world wheat production quantity and harvest area. I recently discovered a data repository called Data Market, which hosts data sets organized by industry, country, and various other topics and sources, including the United Nations.

This particular data set on wheat was provided by the Food and Agriculture Organization (FAO) of the United Nations. This UN organization helps developing and developed countries "improve agriculture, forestry, and fishing practices, and ensuring good nutrition and food security." It is headquartered in Rome, Italy.

[Figure: Tasty Wheat]

Wheat is an important crop, and many of us eat some form of this cereal grain every day, whether as bread or as another cereal product. So let us start number munching!

Getting Wheat from (Data) Market to R

Data Market hosts many data sets, and a quick search turns up our wheat production data. I found it by sifting through the Food and Agriculture industry section, under the Crops header, shown below.

[Figure: Food and Agriculture Industry]

[Figure: Wheat Harvest Area and Production Quantity]

Now that we have the wheat CSV data, we can import it into R and begin the analysis.

Wheat Data

We saw what the data looked like online, and we would like to reproduce it in R. Let us plot the wheat time series data.

First, read in the CSV file, which gives us 3 columns and 48 rows of data. The first column in wheat holds the year, the second the Area Harvested in hectares (Ha), and the third the Production Quantity in metric tonnes. Note the last row: it holds a comment and NA values, so we need to remove it. Also, let us specify that the time series begins in 1961, with 1 measurement per year.

We can do all this by selecting the elements of wheat to convert into a time series object. The first column is not required and the 48th row needs to be excluded, which gives us wheat[-48, 2:3]. The time units are described as starting in 1961, with 1 measurement per unit of time.
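The import step described above can be sketched roughly as follows. This is only an illustration: the inline CSV text, the column names Year, Area, and Production, and every value other than the rounded 1961 figures are made-up stand-ins for the real file, and the name wheat_demo is hypothetical.

```r
# Hypothetical stand-in for the downloaded CSV. The column names and all
# values except the rounded 1961 figures are invented for illustration.
csv_text <- "Year,Area,Production
1961,204000000,222000000
1962,206000000,250000000
1963,205000000,235000000
1964,212000000,270000000
Source: FAO,,"

# With the real file this would be read.csv("<path to the wheat CSV>").
raw <- read.csv(text = csv_text, stringsAsFactors = FALSE)

# The trailing comment row has NA in both numeric columns.
print(tail(raw, 1))

# Drop the last row and the year column, then build an annual time series
# starting in 1961 with one observation per year.
wheat_demo <- ts(raw[-nrow(raw), 2:3], start = 1961, frequency = 1)
print(wheat_demo)
```

Using -nrow(raw) instead of a hard-coded -48 drops the comment row whatever the file length, as long as the comment stays on the last line.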

[Figure: Reading in Wheat CSV]

[Figure: Plotting Wheat]

Wheat production measured about 222 million metric tonnes in 1961 and had increased to over 600 million metric tonnes by 2007. Surprisingly, the harvest area remained relatively stable, going from 204 million hectares in 1961 to over 214 million hectares in 2007.
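For readers without the data at hand, a plot of this kind can be sketched on a synthetic stand-in. The series below are simulated: only the rough start and end levels are taken from the figures quoted above, the noise is arbitrary, and wheat_demo is a hypothetical name.

```r
# Synthetic stand-in for the wheat series (NOT the FAO data): a nearly flat
# harvest area and a rising production quantity, each with arbitrary noise.
set.seed(1)
n <- length(1961:2007)  # 47 annual observations
area <- seq(204e6, 214e6, length.out = n) + rnorm(n, sd = 4e6)
production <- seq(222e6, 603e6, length.out = n) + rnorm(n, sd = 15e6)

wheat_demo <- ts(cbind(Area = area, Production = production),
                 start = 1961, frequency = 1)

# For a multivariate ts object, plot() draws one panel per series.
plot(wheat_demo, main = "Synthetic wheat series (illustration only)")
```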

Perhaps this relationship would be better visualized in a plot of the ratio of the two series. Take the production series and divide it by the harvest area to obtain the ratio, in tonnes per hectare.
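A minimal sketch of the ratio calculation, again on stand-in data: the two series below are straight lines between the approximate 1961 and 2007 levels, and the 603 million tonne endpoint is my assumption, chosen only to be consistent with "over 600 million".

```r
# Straight-line stand-ins between approximate 1961 and 2007 levels.
# These are NOT the FAO values; the 603e6 endpoint is an assumption.
n <- 47
area <- ts(seq(204e6, 214e6, length.out = n), start = 1961, frequency = 1)
production <- ts(seq(222e6, 603e6, length.out = n), start = 1961, frequency = 1)

# Dividing two aligned ts objects gives a ts of tonnes per hectare.
yield_ratio <- production / area

plot(yield_ratio, ylab = "Tonnes per hectare",
     main = "Production / harvest area (illustration only)")

print(yield_ratio[1])                    # first year, 1961
print(yield_ratio[length(yield_ratio)])  # last year, 2007
```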

[Figure: Wheat Ratio Plot Code]

Observe that the ratio is close to 1.09 tonnes per hectare in 1961 and rises to around 2.82 in 2007. The 2007 ratio is about 2.6 times (260% of) the 1961 ratio, which is a rise of roughly 160% in wheat production per hectare across the 47 years of data.

Those figures are obtained below by dividing the ratio from 2007 by the ratio from 1961:
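The same arithmetic can be checked by hand with the two rounded ratios quoted above; the variable names here are only illustrative.

```r
# Rounded ratios quoted in the text (tonnes per hectare).
ratio_1961 <- 1.09
ratio_2007 <- 2.82

# How many times larger the 2007 ratio is than the 1961 ratio.
fold <- ratio_2007 / ratio_1961

# The same change expressed as a percentage increase over 1961.
pct_rise <- (fold - 1) * 100

print(round(fold, 2))   # about 2.59
print(round(pct_rise))  # about 159
```

Note the distinction between "2.6 times the 1961 level" and "a rise of about 160%": both describe the same change.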

[Figure: Wheat Production per Hectare]

Time Series Distributions of Wheat

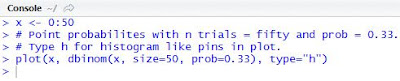

Before we dive into any time series decomposition, let us first look at the distribution of wheat Production Quantity and Harvest Area. Since the tonnes of wheat produced and the hectares used number in the hundreds of millions, we will make both series more manageable by passing them through log(). Then we plot the differences between consecutive measurements with diff() to see how the time series changes from one year to the next.

[Figure: Plotting Wheat Distributions]

The abline(0, 0) call simply adds the line y=0 (intercept 0, slope 0) as a reference marking no difference between consecutive points.
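Here is a hedged sketch of the log-and-difference plot on simulated data. The series are synthetic stand-ins, not the FAO values, and the colors and labels are my own choices.

```r
# Synthetic stand-ins (NOT the FAO data): flat-ish area, rising production.
set.seed(1)
n <- 47
area <- ts(seq(204e6, 214e6, length.out = n) + rnorm(n, sd = 4e6),
           start = 1961, frequency = 1)
production <- ts(seq(222e6, 603e6, length.out = n) + rnorm(n, sd = 15e6),
                 start = 1961, frequency = 1)

# Year-over-year differences of the logged series (46 values, from 1962).
d_prod <- diff(log(production))
d_area <- diff(log(area))

# Plot both difference series on a shared y-axis range.
plot(d_prod, col = "red", ylim = range(d_prod, d_area),
     ylab = "Difference of log values",
     main = "Log differences (illustration only)")
lines(d_area, col = "blue")
abline(0, 0, lty = 2)  # reference line y = 0: no change from the prior year
legend("topright", legend = c("Production", "Harvest area"),
       col = c("red", "blue"), lty = 1)
```

A difference of logs approximates the relative year-over-year change, which is why series on very different scales become comparable on one axis.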

Most of the distribution for Production Quantity (red) lies above y=0, indicating that the differences between consecutive elements are mostly positive and that the overall trend of the time series is increasing more than it is decreasing. On the other hand, the Harvest Area differences do not appear to lean in either direction and are more stable than those of Production Quantity. These observations are confirmed when looking back at the Wheat Time Series Plot.

Distribution Histograms

Another way to visualize the distributions is with a histogram. First, we plot production quantity with hist() and specify that it plot the probability density rather than counts. Next, we overlay the estimated density line to show the shape of the distribution on the histogram. Then, assuming the differences follow a normal distribution, we calculate the mean and the standard deviation of the differences of the log of production and plot the corresponding parametric normal curve over the histogram as well.
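The histogram steps above might look roughly like this. It runs on a simulated production series (not the FAO data), and the colors, labels, and the use of density() for the overlaid line are assumptions on my part.

```r
# Synthetic stand-in for production quantity (NOT the FAO data).
set.seed(1)
n <- 47
production <- ts(seq(222e6, 603e6, length.out = n) + rnorm(n, sd = 15e6),
                 start = 1961, frequency = 1)
d_prod <- as.numeric(diff(log(production)))

# Histogram on the density scale so curves can be overlaid on it.
hist(d_prod, freq = FALSE, col = "wheat",
     xlab = "Difference of log production",
     main = "Production differences (illustration only)")

# Kernel density estimate of the observed differences.
lines(density(d_prod), lwd = 2)

# Parametric normal curve using the sample mean and standard deviation.
mu <- mean(d_prod)
s <- sd(d_prod)
x <- seq(min(d_prod), max(d_prod), length.out = 200)
lines(x, dnorm(x, mean = mu, sd = s), col = "red", lwd = 2)
```

Comparing the black kernel estimate with the red normal curve gives a quick visual check of how reasonable the normality assumption is.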

[Figure: Histogram for Wheat Production Differences]

We can observe the more stable wheat harvest area distribution with the same code, modified only to use column 1 of wheat and different colors in the histogram.

[Figure: Plotting Wheat Harvest Differences]

After analyzing the distributions of both wheat production quantity and harvest area, we have a better grasp of the wheat time series data. Now we can move on to time series decomposition, in the next post! (I had to cut this post here; it was getting too lengthy.)

So stay tuned, and stay curious!

Thanks for reading,

Wayne

@beyondvalence